Test Early, Test Often

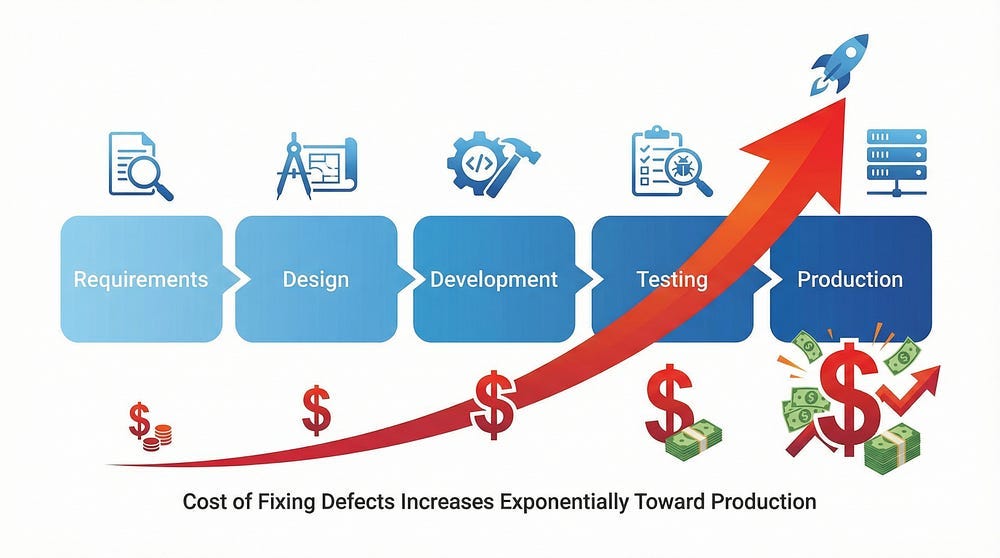

The cost benefits of catching defects sooner

Welcome back to NextGen QA! In our previous articles, we’ve explored what testing is, the guiding principles, the difference between testing and debugging, and the psychology behind effective testing. Today, we’re tackling one of the most important concepts in software quality: when you test matters just as much as how you test.

This isn’t just theory — it’s economics. The timing of when you find and fix defects has a dramatic impact on cost, schedule, and product quality. Understanding this principle will change how you approach testing throughout your career.

Let’s explore why “test early, test often” isn’t just a catchy slogan — it’s a fundamental strategy for successful software development.

The Cost of Defects: A Financial Reality

Here’s a rule of thumb that every developer, tester, and project manager should know: the cost of fixing a defect rises steeply, often by orders of magnitude, the later it’s found in the development lifecycle.

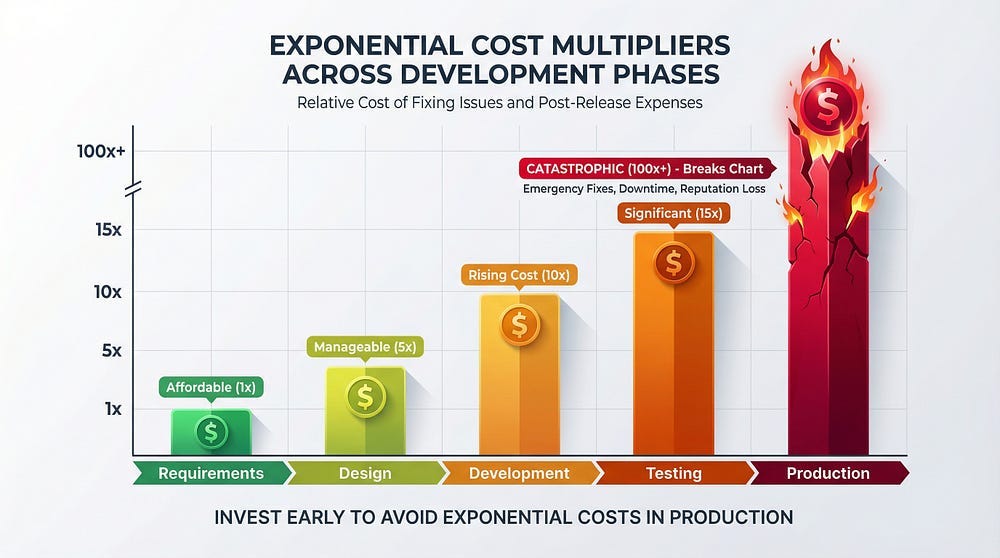

Commonly cited industry estimates put the relative cost of a fix at roughly:

Requirements Phase: 1x cost (baseline)

Finding a defect during requirements review might take 15–30 minutes to fix

Simply updating documentation or clarifying specifications

Design Phase: 5–10x cost

May require redesigning architecture or data models

Multiple documents need updating

Team alignment needed

Development Phase: 10–15x cost

Code already written needs to be rewritten

Unit tests need updating

Integration points affected

Testing Phase: 15–40x cost

More code invested, more dependencies

Regression testing required

Documentation updates

Potential schedule delays

Production: 100–1000x cost or more

Emergency fixes and patches

Potential data corruption or loss

Customer support costs

Reputation damage

Possible legal liability

Lost revenue

Customer churn
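
To make the multipliers concrete, here is a minimal sketch that applies them to a hypothetical defect costing $100 to fix during requirements. The baseline figure and the names `COST_MULTIPLIERS` and `fix_cost_range` are assumptions for illustration; the ranges are the ones listed above.

```python
# Relative fix-cost ranges by phase, copied from the list above.
# The production upper bound is open-ended ("or more") in the text.
COST_MULTIPLIERS = {
    "requirements": (1, 1),
    "design": (5, 10),
    "development": (10, 15),
    "testing": (15, 40),
    "production": (100, 1000),
}


def fix_cost_range(baseline_cost, phase):
    """Return the (low, high) estimated cost of fixing a defect in `phase`."""
    low, high = COST_MULTIPLIERS[phase]
    return baseline_cost * low, baseline_cost * high


baseline = 100  # hypothetical cost in dollars at the requirements phase
for phase in COST_MULTIPLIERS:
    low, high = fix_cost_range(baseline, phase)
    print(f"{phase:<12} ${low:,} to ${high:,}")
```

Swapping in your own baseline and your own observed multipliers turns this into a quick talking point for planning meetings.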

Real-World Examples: When Late Detection Costs Everything

Let’s look at concrete examples of how late defect detection impacts real projects:

Example 1: The Banking Transfer Bug

The Defect: A requirements document specified that users could transfer “between $0.01 and $10,000” but didn’t define what happens with exactly $0.00.

If Caught in Requirements (Week 1):

Cost: 5 minutes to clarify and document

Impact: Zero

Actually Caught in Production (Month 6):

Thousands of $0.00 transfers clogged the system

Database filled with meaningless records

Performance degraded

Emergency hotfix required

Database cleanup script needed

Customer confusion and support tickets

Total Cost: $47,000 and 3 days of emergency work

The Lesson: A 5-minute requirements review question could have prevented a $47,000 problem.
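
Once the requirement is clarified, it might reach code as an explicit range check with tests at the boundaries. This is only a sketch: the function name, the constants, and the decision to reject $0.00 are assumptions, since the original requirement never said what should happen.

```python
from decimal import Decimal

# Limits taken from the requirement quoted above.
MIN_TRANSFER = Decimal("0.01")
MAX_TRANSFER = Decimal("10000.00")


def is_valid_transfer_amount(amount):
    """Return True when amount is within the allowed transfer range.

    Assumption: amounts outside the range, including exactly $0.00,
    are rejected. The original requirement left this undefined.
    """
    return MIN_TRANSFER <= Decimal(str(amount)) <= MAX_TRANSFER


# Boundary-value checks: the values on and just outside each limit.
assert not is_valid_transfer_amount("0.00")
assert is_valid_transfer_amount("0.01")
assert is_valid_transfer_amount("10000.00")
assert not is_valid_transfer_amount("10000.01")
```

Writing the four boundary checks is exactly the exercise that forces someone to ask "what about $0.00?" before any user does.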

Example 2: The Date Format Assumption

The Defect: Developer assumed all users would enter dates in MM/DD/YYYY format. No requirement specified format or validation.

If Caught in Design Review (Week 2):

Cost: 1 hour to specify format requirements

Add input validation to design specs

Impact: Minimal

Actually Caught by Users (Month 4):

European users entering DD/MM/YYYY caused data corruption

Historical data had mixed formats

Reports showed incorrect dates

Data migration script required

Customer trust eroded

Total Cost: $23,000 and 2 weeks of work

The Lesson: Design-phase thinking prevents production disasters.
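
The ambiguity is easy to demonstrate. The sketch below parses the same text under both formats, then shows one possible fix: accepting only a single, unambiguous format. Choosing ISO 8601 (YYYY-MM-DD) and the helper name `parse_iso_date` are assumptions; the real format belongs in the requirements.

```python
from datetime import date, datetime

# The same text means two different days depending on the assumed format.
raw = "03/04/2024"
as_us = datetime.strptime(raw, "%m/%d/%Y").date()        # March 4, 2024
as_european = datetime.strptime(raw, "%d/%m/%Y").date()  # April 3, 2024


def parse_iso_date(text):
    """Parse a date in the unambiguous YYYY-MM-DD format.

    Raises ValueError for anything else, so bad input is rejected
    at the boundary instead of being stored as the wrong date.
    """
    return datetime.strptime(text, "%Y-%m-%d").date()


print(as_us, as_european, parse_iso_date("2024-04-03"))
```

Both parses succeed without error, which is why this kind of defect stays hidden until the data is already corrupted.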

Example 3: The Missing Error Handling

The Defect: No requirements or design specified what happens when external API calls fail.

If Caught During Code Review (Week 5):

Cost: 2 hours to add proper error handling

Write a few tests

Impact: Minor

Actually Caught in Production (Launch Day):

Application crashed when payment gateway went down

No graceful degradation

Users lost cart data

Launch delayed

Emergency all-hands meeting

Rushed fix introduced new bugs

Total Cost: $156,000, delayed launch, damaged reputation

The Lesson: Code reviews and early testing catch missing scenarios before they become crises.
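
Here is roughly what those two hours of error handling could look like. Everything in this sketch is hypothetical: `PaymentGatewayError`, `checkout`, and the `charge` callable stand in for whatever client the real project uses.

```python
class PaymentGatewayError(Exception):
    """Raised by the (hypothetical) gateway client when a call fails."""


def checkout(cart, charge):
    """Try to charge the cart; keep the cart intact if the gateway fails.

    `charge` is any callable that takes the total and raises
    PaymentGatewayError on failure. It stands in for a real client.
    """
    total = sum(item["price"] for item in cart)
    try:
        charge(total)
    except PaymentGatewayError:
        # Degrade gracefully: do not crash, do not discard the cart.
        return {"status": "payment_unavailable", "cart": cart}
    return {"status": "paid", "cart": []}


def failing_gateway(total):
    """Simulate an outage so the failure path can be tested."""
    raise PaymentGatewayError("gateway is down")


cart = [{"name": "book", "price": 12}, {"name": "pen", "price": 3}]
result = checkout(cart, failing_gateway)
assert result["status"] == "payment_unavailable"
assert result["cart"] == cart  # the user's cart survives the outage
```

Passing the gateway in as a parameter is a design choice that makes the outage trivial to simulate in a test.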

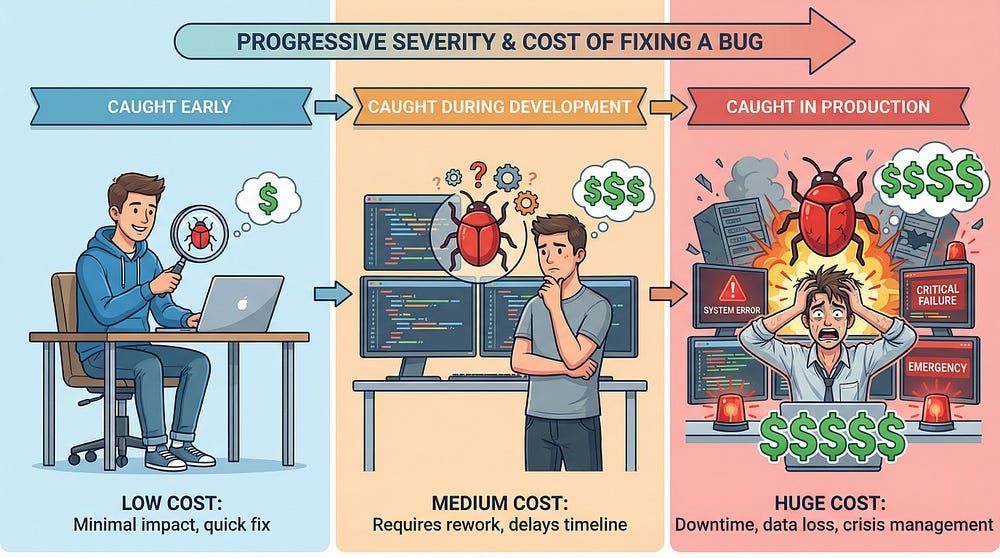

Why Early Detection Saves Money

Understanding why early detection is cheaper helps you advocate for it effectively:

1. Less Code Investment

Early in the project, less code exists. Changing requirements or design doesn’t require rewriting thousands of lines of code.

Example: Changing a database schema in design phase: 1 hour. Changing it after 50,000 lines of code depend on it: 2 weeks.

2. Fewer Dependencies

Early on, components aren’t tightly coupled yet. Changes don’t cascade through the entire system.

Example: Changing an API endpoint signature in design: update the spec. Changing it after 20 services consume it: update 20 services, coordinate deployments, manage versions.

3. Easier Communication

During requirements and design, the whole team is focused on planning. Discussions are expected and encouraged.

Example: Questioning a requirement in a planning meeting: normal collaboration. Questioning it 3 months into development: “Why didn’t you bring this up earlier?”

4. No Emergency Pressure

Early defects are found during calm planning phases. Production defects create panic, rushed fixes, and compounding errors.

Example: Designing proper error handling: thoughtful, tested, peer-reviewed. Emergency production fix: whatever stops the bleeding right now.

5. User Impact

Early defects affect only the development team. Production defects affect real users, causing frustration, lost trust, and potential business loss.

Example: Requirements defect: team discusses it over coffee. Production defect: angry users on social media, support tickets flooding in, executives asking questions.

What “Test Early” Actually Means

“Test early” doesn’t just mean “start test execution sooner.” It means involving testing thinking throughout the entire development lifecycle:

Testing During Requirements

What it looks like:

Reviewing requirements for completeness, clarity, and testability

Asking “how will we verify this?” for each requirement

Identifying ambiguities and missing scenarios

Ensuring requirements are actually testable

Questions testers ask:

“What defines success for this feature?”

“What should happen in error scenarios?”

“Are there any assumptions we’re making?”

“How will users actually use this?”

“What are the acceptance criteria?”

Value provided:

Catch missing requirements before coding starts

Clarify vague specifications

Identify impossible or contradictory requirements

Ensure requirements address real user needs

Example: A requirement says “The system shall be fast.” A tester asks: “What does ‘fast’ mean? Page load under 2 seconds? API response under 500ms? Can we make this measurable?”
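
Once "fast" has a number attached, it can be checked automatically. The sketch below assumes the "API response under 500ms" reading and uses a hypothetical `handle_request` function as a stand-in for the real operation. A single timing like this is noisy, so treat it as an illustration rather than a benchmark.

```python
import time

RESPONSE_BUDGET_SECONDS = 0.5  # the "API response under 500ms" reading


def handle_request():
    """Hypothetical stand-in for the operation being measured."""
    return sum(range(1000))


def measure_seconds(func):
    """Return how long one call to func takes, in seconds."""
    start = time.perf_counter()
    func()
    return time.perf_counter() - start


elapsed = measure_seconds(handle_request)
assert elapsed < RESPONSE_BUDGET_SECONDS
```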

Testing During Design

What it looks like:

Reviewing architecture and design documents

Identifying potential integration issues

Considering testability in design decisions

Planning test data and test environments early

Questions testers ask:

“How will we test this integration point?”

“What happens if this external service is unavailable?”

“Can we simulate this in a test environment?”

“Are there security considerations we should test?”

“Will this design make automation difficult?”

Value provided:

Catch design flaws before implementation

Ensure designs are testable

Plan for test environments and data

Identify security and performance risks

Example: Design shows tight coupling between components. Tester asks: “If we need to test the payment module independently, how will we mock the user authentication dependency?”
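
One common answer to that question is to pass the authentication dependency into the payment module so a test can substitute a mock. The class and method names below are hypothetical; `unittest.mock.Mock` is part of the Python standard library.

```python
from unittest.mock import Mock


class PaymentModule:
    """Hypothetical payment module that receives its auth dependency."""

    def __init__(self, auth_service):
        self.auth_service = auth_service

    def pay(self, user_id, amount):
        if not self.auth_service.is_authenticated(user_id):
            return "rejected"
        return f"charged {amount}"


# In a test, a mock replaces the real authentication service.
fake_auth = Mock()
fake_auth.is_authenticated.return_value = True
payments = PaymentModule(fake_auth)

assert payments.pay("user-1", 25) == "charged 25"
fake_auth.is_authenticated.assert_called_once_with("user-1")
```

If the design hard-codes the authentication call inside the payment module, this substitution is much harder, which is why the question is worth raising during design review.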

Testing During Development

What it looks like:

Developers writing unit tests

Code reviews with quality focus

Continuous integration running automated tests

Exploratory testing of work-in-progress features

Questions testers ask:

“What edge cases did you consider?”

“How did you test error handling?”

“What happens with invalid inputs?”

“Did you test this on different browsers/devices?”

Value provided:

Find bugs before code is “done”

Ensure code meets quality standards

Catch integration issues early

Build test automation alongside features

Example: During code review, a tester notices that an age field has no validation for negative numbers and asks: “Should we add validation here?” The result is a five-minute fix instead of a bug report later.
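
That five-minute fix could be as small as the sketch below. The function name and the upper limit of 130 are assumptions; the actual bounds should come from the requirements.

```python
def validate_age(value):
    """Return the age as an int, or raise ValueError if it is not plausible.

    The upper limit of 130 is an assumption; the real limit belongs
    in the requirements.
    """
    age = int(value)
    if age < 0 or age > 130:
        raise ValueError(f"age out of range: {age}")
    return age


print(validate_age("30"))
```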

Testing During Deployment

What it looks like:

Smoke testing in staging environments

Verifying deployment scripts

Checking production monitoring

Ensuring rollback procedures work

Questions testers ask:

“Did the deployment succeed?”

“Are all services running?”

“Is monitoring working?”

“Can we roll back if needed?”

Value provided:

Catch deployment issues before they affect users

Verify production readiness

Ensure monitoring catches real issues

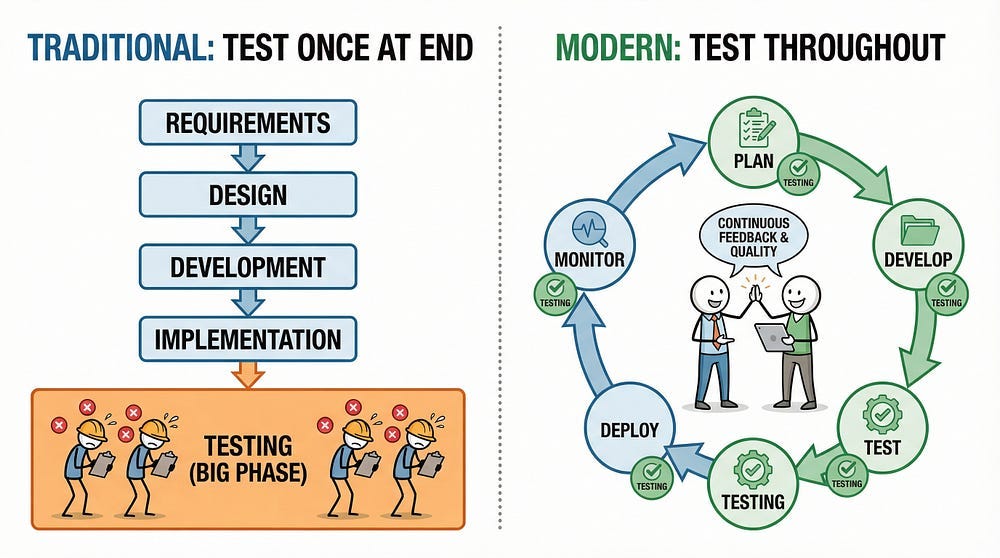

What “Test Often” Actually Means

Testing isn’t a one-time event — it’s a continuous activity:

Continuous Testing in Modern Development

Traditional Approach (Don’t Do This):

Write all code for 6 weeks

Hand to testers

Find 200 bugs

Fix for 4 weeks

Test again

Still find bugs

Scramble to launch

Modern Approach (Do This):

Write feature incrementally

Test immediately

Fix issues same day

Deploy to staging

Test again

Deploy to production

Monitor

Repeat for next feature

Benefits of Testing Often

1. Faster Feedback

Developers get immediate feedback while context is fresh

Issues are easier to fix when the code was written recently

Learning happens in real-time

2. Smaller Change Sets

Testing small changes is easier than testing everything at once

Pinpointing what caused a bug is simpler

Risk is lower per deployment

3. Reduced Integration Pain

Components integrate continuously, not all at once at the end

Integration issues surface immediately

No “big bang” integration nightmares

4. Better Quality

Quality is built in, not tested in

Problems don’t accumulate

Technical debt is minimized

5. Predictable Velocity

No surprise “testing phase” at the end revealing hundreds of bugs

Consistent progress throughout

More accurate estimates

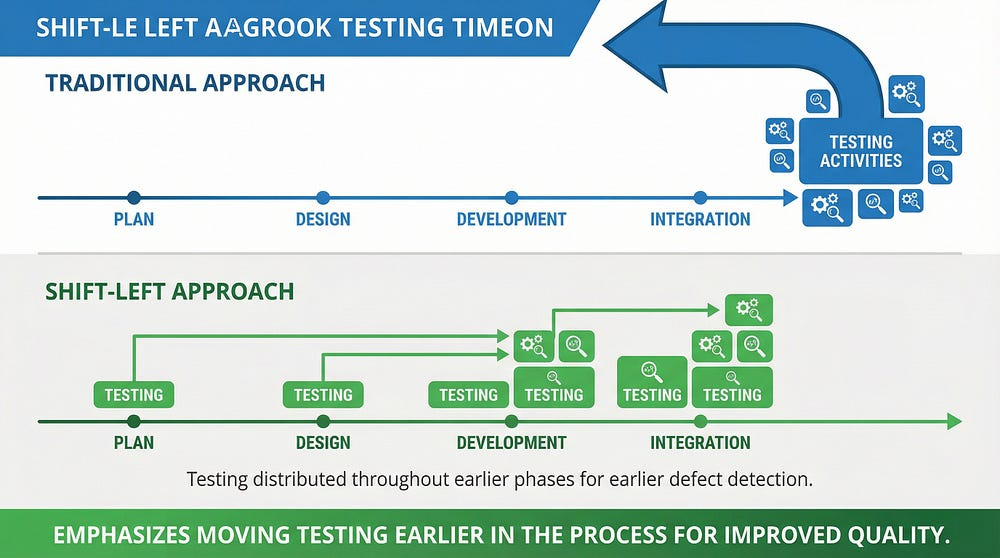

The Shift-Left Movement

“Shift-left testing” means moving testing activities earlier (left) in the development timeline. It’s become a major trend in software development because of its proven cost benefits.

What Shift-Left Includes

1. Requirements Testing

Reviewing and validating requirements before development

Creating acceptance criteria upfront

Defining test scenarios during planning

2. Test-Driven Development (TDD)

Writing tests before writing code

Code is automatically testable

Design emerges from test thinking

3. Behavior-Driven Development (BDD)

Writing executable specifications

Collaboration between business, dev, and test

Shared understanding before coding

4. Continuous Integration/Continuous Deployment (CI/CD)

Automated testing on every code commit

Immediate feedback to developers

Quality gates prevent bad code from advancing

5. Static Analysis

Analyzing code without executing it

Finding potential bugs, security issues, code smells

Catching issues before they become defects
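
To show what "analyzing code without executing it" means in practice, here is a tiny static check built on Python's standard `ast` module. It flags bare `except:` clauses, which silently swallow every error. The sample source and the function name `find_bare_excepts` are made up for illustration; real projects would normally rely on an established linter rather than a hand-rolled check.

```python
import ast

# Sample code to analyze. It is parsed as text and never executed.
SOURCE = '''
def load(path):
    try:
        return open(path).read()
    except:
        return None
'''


def find_bare_excepts(source):
    """Return line numbers of `except:` clauses that catch everything."""
    tree = ast.parse(source)
    return [
        node.lineno
        for node in ast.walk(tree)
        if isinstance(node, ast.ExceptHandler) and node.type is None
    ]


print(find_bare_excepts(SOURCE))
```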

Proven Benefits of Shift-Left

Organizations that adopt shift-left testing commonly report results such as:

30–40% reduction in defects reaching production

40–50% reduction in overall development costs

50–60% faster time to market

Improved team collaboration and communication

Higher developer satisfaction (fewer emergency fixes)

Better product quality and customer satisfaction

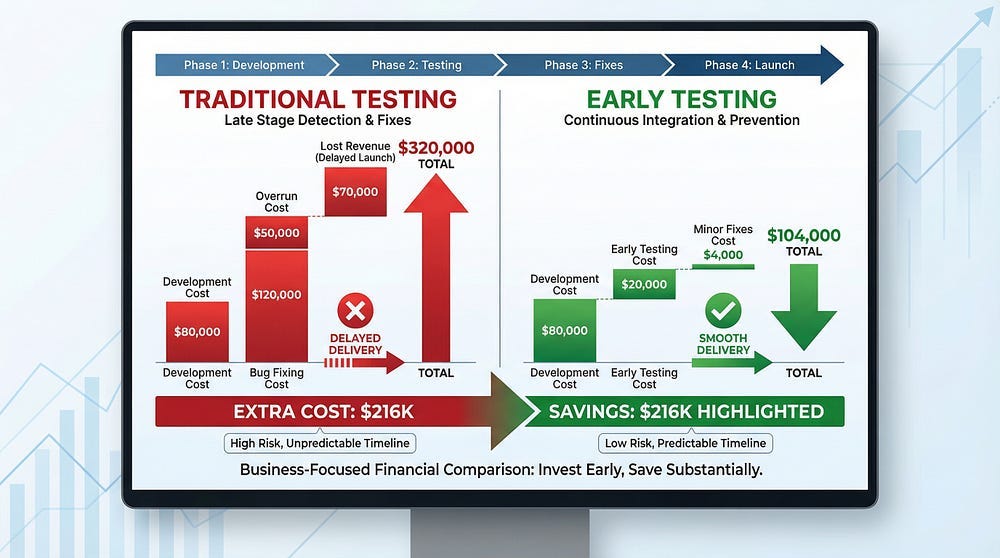

The ROI of Early Testing: Real Numbers

Let’s put actual numbers to the principle:

Scenario: E-Commerce Checkout Feature

Project Details:

New checkout feature for online store

Team of 5 developers, 2 testers

12-week timeline

Expected to process $2M in transactions monthly

Approach A: Traditional Testing (Testing at End)

Week 1–10: Development

Week 11–12: Testing

Results:

156 bugs found during testing phase

47 bugs were design/requirements issues requiring significant rework

4 additional weeks needed to fix and retest

18 bugs still found by users in first month

Cost overrun: $89,000

Revenue lost due to cart abandonment bugs: $127,000

Total additional cost: $216,000

Approach B: Early & Continuous Testing

Week 1: Requirements review with testers

Week 2–12: Development with continuous testing

Results:

31 potential issues identified during requirements review

89 bugs found during development (fixed same week)

12 bugs found during final testing

3 bugs found by users in first month

Project delivered on time

Additional cost: $0

Revenue protection: $127,000

Net Benefit of Early Testing: $216,000 for one feature
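
The arithmetic behind that figure is simple enough to check in a few lines. All numbers below come from the scenario above; only the variable names are mine.

```python
# Figures from Approach A above.
cost_overrun = 89_000
lost_revenue = 127_000
total_additional_cost_a = cost_overrun + lost_revenue

# Approach B reported no additional cost.
additional_cost_b = 0

net_benefit = total_additional_cost_a - additional_cost_b
print(f"Approach A additional cost: ${total_additional_cost_a:,}")
print(f"Net benefit of Approach B:  ${net_benefit:,}")

# Bugs found by users in the first month: 18 under A, 3 under B.
escaped_reduction = (18 - 3) / 18
print(f"Reduction in user-found bugs: {escaped_reduction:.0%}")
```

Note that the net benefit equals the full $216,000 only because the scenario assumes early testing added no cost of its own.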

Common Objections and Responses

When advocating for early testing, you’ll hear objections. Here’s how to address them:

Objection 1: “We don’t have time for early testing”

Response: You don’t have time NOT to test early. Every hour spent on early testing saves 3–10 hours later. Would you rather spend 10 hours reviewing requirements or 100 hours fixing bugs those unclear requirements caused?

Data: Studies show projects with requirements review have 40–50% fewer defects and finish 30% faster.

Objection 2: “Requirements always change anyway”

Response: True, but early testing helps identify which requirements are incomplete or likely to change. It’s cheaper to change requirements documentation than to change built code.

Reality: Requirements will change. But testing early ensures changes are intentional and understood, not discovered accidentally through bugs.

Objection 3: “Developers should test their own code”

Response: Absolutely! Developers testing their own code IS early testing. But we also need independent testing because of the cognitive biases we discussed in Article 4. Both are valuable.

Clarification: Early testing includes developer testing, code reviews, pair programming, AND independent tester involvement.

Objection 4: “We’ll test in the dedicated testing phase”

Response: There shouldn’t be a “dedicated testing phase.” Testing should be continuous. Waiting creates the very cost escalation we’re trying to avoid.

Alternative: Think of “testing phase” as final verification, not the first time anyone tests anything.

Objection 5: “Our testers don’t have technical skills for early testing”

Response: Early testing doesn’t always require technical skills. Requirements review needs domain knowledge and critical thinking. That said, investing in tester technical skills pays enormous dividends.

Action: Start with what your testers can do now (requirements review, design feedback) while building technical skills for earlier involvement.

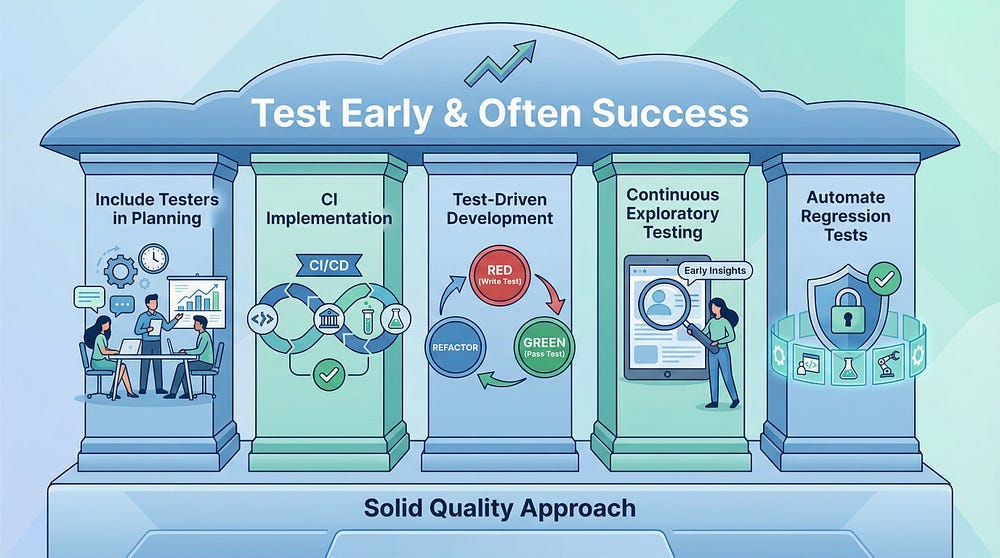

Practical Strategies for Testing Early and Often

Here’s how to actually implement this in your projects:

Strategy 1: Include Testers in Planning

How:

Invite testers to requirements meetings

Ask testers to review user stories before sprint planning

Have testers create acceptance criteria

Discuss testability during design reviews

Benefit: Catches issues before any code is written

Strategy 2: Implement Continuous Integration

How:

Set up automated builds on every code commit

Run unit tests automatically

Run integration tests in staging environment

Block merges if tests fail

Benefit: Immediate feedback, with bugs caught within hours, not weeks

Strategy 3: Practice Test-Driven Development

How:

Write test first describing desired behavior

Write minimal code to make test pass

Refactor while keeping tests passing

Repeat

Benefit: Code is automatically testable, design improves, confidence increases
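
The cycle above can be sketched in a single file using Python's built-in `unittest` module. The `cart_total` function is a hypothetical example chosen for brevity. The tests appear first in the file because they were written first.

```python
import unittest


# Step 1: write the test first. It describes the desired behavior
# and fails until the function below exists.
class TestCartTotal(unittest.TestCase):
    def test_empty_cart_totals_zero(self):
        self.assertEqual(cart_total([]), 0)

    def test_total_is_sum_of_prices(self):
        self.assertEqual(cart_total([5, 10]), 15)


# Step 2: write the minimal code that makes the tests pass.
def cart_total(prices):
    return sum(prices)


# Step 3: refactor as needed, rerunning the tests after each change.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(TestCartTotal)
result = unittest.TextTestRunner().run(suite)
```

Try deleting `cart_total` and running the file: both tests fail, which is the "red" step that TDD starts from.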

Strategy 4: Do Continuous Exploratory Testing

How:

Testers access work-in-progress features

Explore new functionality as soon as it’s minimally usable

Report issues immediately (not waiting for “code complete”)

Quick feedback loop with developers

Benefit: Real-world usage insights while the feature is still easy to change

Strategy 5: Automate Regression Tests

How:

Build test automation alongside features

Run automated tests frequently

Treat test code with same care as production code

Maintain and update tests continuously

Benefit: Confidence that changes don’t break existing functionality

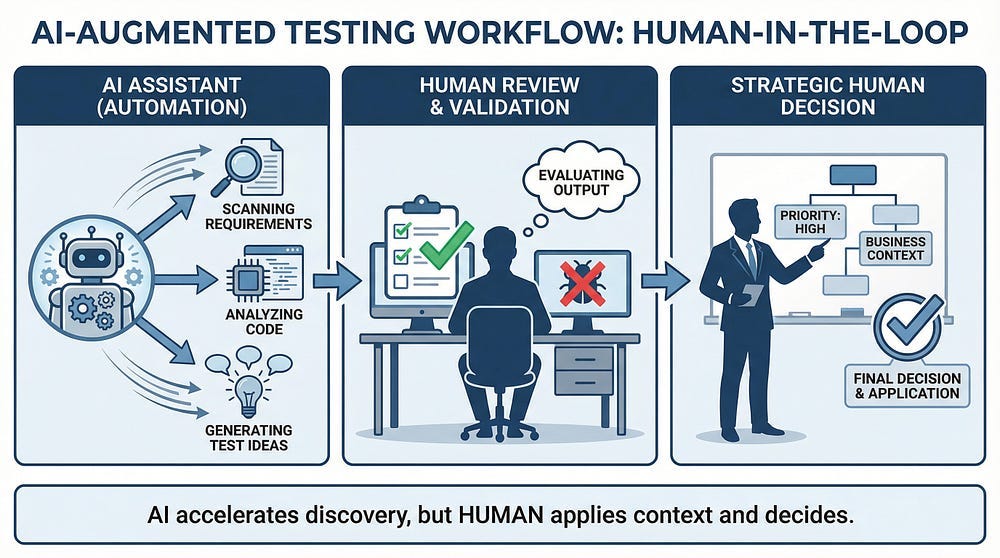

The Role of AI in Early Testing

AI tools are making early testing even more effective:

How AI Supports Early Testing

Requirements Analysis:

AI can scan requirements for ambiguity and incompleteness

Suggest test scenarios based on requirements

Identify contradictions or missing information

Test Case Generation:

Generate initial test cases early in the process

Suggest edge cases and error scenarios

Create test data automatically

Code Analysis:

Static analysis tools catch issues during development

AI suggests potential bugs before code is even run

Security vulnerability scanning

Continuous Monitoring:

AI analyzes production logs to predict potential issues

Identifies patterns that might indicate future problems

Proactive rather than reactive

But Remember: Trust But Verify

AI can accelerate early testing, but it has limitations:

AI can’t:

Understand business context and priorities

Evaluate requirements for business value

Determine what’s “good enough”

Replace human creativity in finding unusual scenarios

Your role:

Use AI to find obvious issues faster

Apply your expertise to evaluate AI suggestions

Focus your human effort on what AI misses

Make strategic decisions about risk and priority

Measuring the Impact of Early Testing

To prove the value of early testing in your organization, track these metrics:

Key Metrics to Track

1. Defect Detection Rate by Phase

How many bugs found in requirements vs design vs development vs testing vs production?

Goal: Shift the curve left over time

2. Cost Per Defect by Discovery Phase

How much does it cost to fix bugs found at different stages?

Shows the economic impact clearly

3. Escaped Defects

How many bugs reach production?

Target: Decrease over time as early testing improves

4. Time to Fix

How long from bug discovery to fix?

Earlier detection = faster fixes

5. Cycle Time

How long from feature start to production?

Early testing should decrease overall cycle time

6. Rework Percentage

What percentage of work is redoing/fixing vs new development?

Lower rework indicates better early testing
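
Two of these metrics, defect detection rate by phase and escaped defects, can be computed from nothing more than a list of where each defect was found. The defect log below is invented sample data, and the function names are assumptions; in practice the list would come from your bug tracker.

```python
from collections import Counter

PHASES = ["requirements", "design", "development", "testing", "production"]

# Hypothetical defect log: the phase in which each defect was found.
defects_found_in = [
    "requirements", "design", "development", "development",
    "testing", "testing", "testing", "production",
]


def detection_rate_by_phase(found_in):
    """Return the percentage of defects discovered in each phase."""
    counts = Counter(found_in)
    total = len(found_in)
    return {phase: 100 * counts[phase] / total for phase in PHASES}


def escaped_defect_rate(found_in):
    """Return the percentage of defects that reached production."""
    return 100 * found_in.count("production") / len(found_in)


rates = detection_rate_by_phase(defects_found_in)
for phase in PHASES:
    print(f"{phase:<12} {rates[phase]:.1f}%")
print(f"escaped      {escaped_defect_rate(defects_found_in):.1f}%")
```

Run it on each quarter's data and compare: if early testing is working, the requirements and design percentages should grow while the escaped rate shrinks.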

Before and After Comparison

Track metrics for 3 months before implementing early testing practices, then compare to 3 months after:

Example Results:

Defects found in requirements/design: 15% → 45%

Defects found in production: 12% → 3%

Average time to fix defect: 8 hours → 2 hours

Project timeline predictability: 60% → 90%

Team stress levels: High → Moderate

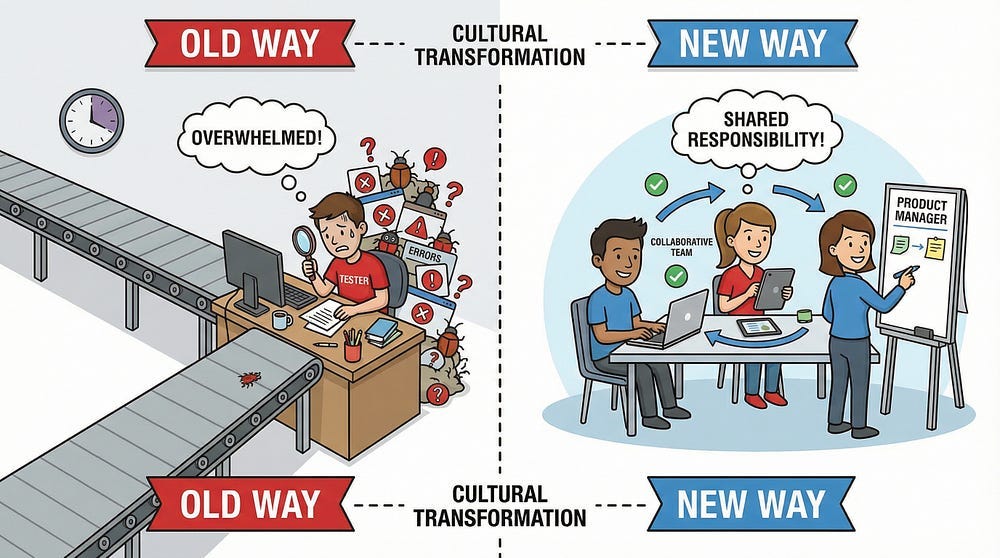

Cultural Shift Required

Implementing early and frequent testing isn’t just a process change — it requires a cultural shift:

From “Testing Phase” to “Testing Throughout”

Old mindset:

“Testing happens at the end”

“Testers test, developers develop”

“We’ll fix bugs later”

“Testing slows us down”

New mindset:

“Testing happens continuously”

“Quality is everyone’s responsibility”

“We fix bugs immediately”

“Testing speeds us up by preventing expensive late fixes”

Building the Culture

Leadership support:

Executives understand and communicate the economic benefits

Managers allocate time for early testing activities

Success is measured by quality, not just speed

Team collaboration:

Developers and testers work together, not in silos

Mutual respect for different perspectives

Shared ownership of quality

Process changes:

Testing is included in estimation and planning

Definition of Done includes testing

Code reviews are standard practice

Continuous integration is non-negotiable

Your Action Steps

This week, practice early testing thinking:

Review something early: Find a requirements document, design spec, or user story. Review it looking for ambiguities, missing scenarios, or testability issues. Document what you find.

Estimate the cost: Think of a bug you recently encountered. Estimate: when was it introduced, when was it found, and what would it have cost to fix at each phase in between?

Map your process: Draw your current development process. Where does testing happen? Identify opportunities to shift testing left.

Have a conversation: Talk to a developer about finding a bug earlier vs later. Share the cost multiplier concept. Get their perspective.

Track one metric: Choose one metric from the measuring section and start tracking it. You need baseline data to show improvement.

Moving Forward

Testing early and often isn’t just a best practice; it’s an economic necessity. The cost multipliers are real, the benefits are well documented, and the competitive advantage is significant.

Organizations that embrace early testing tend to see:

Lower costs

Faster delivery

Higher quality

Less stress

Happier customers

More satisfied teams

The question isn’t whether to test early — it’s how quickly you can shift your organization in that direction.

In our next article, we’ll explore Exhaustive Testing is Impossible — understanding why you can’t test everything and how to make strategic decisions about where to focus your testing efforts. This connects directly to today’s topic: since you can’t test everything, testing early ensures you catch the most important issues when they’re cheapest to fix.

Remember: Spending one hour on early testing saves ten hours—and thousands of dollars—fixing problems later.