Static vs Dynamic Testing

Two Sides of the Quality Coin

Welcome back to NextGen QA! In our last article, we explored verification versus validation — the difference between building it right and building the right thing. Today, we’re diving into another fundamental distinction that every tester must understand: static versus dynamic testing.

Here’s a question that might surprise you: What if I told you that some of the most catastrophic software failures in history — failures that cost billions of dollars and even human lives — could have been prevented without ever running the software?

That’s the power of understanding when to test code by examining it versus when to test it by executing it.

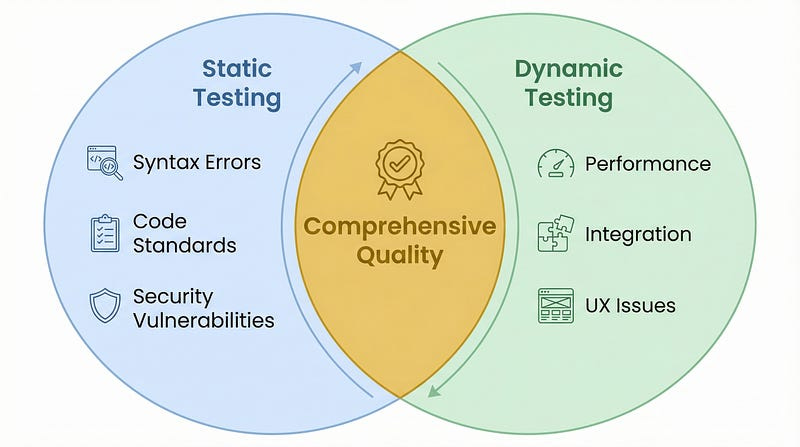

The Core Distinction: Looking vs. Running

Static testing examines software artifacts without executing the code. Think of it like a building inspector reviewing blueprints before construction begins — they’re looking for structural issues, code violations, and design flaws on paper.

Dynamic testing evaluates software by running it and observing its behavior. This is like stress-testing that building after it’s constructed — checking if the doors open properly, if the electrical system works, if it can withstand an earthquake.

Both approaches are essential. Neither alone is sufficient.
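
To make the looking-versus-running distinction concrete, here is a minimal Python sketch. The `average` function and both helper names are made up for illustration. The first helper inspects the source with the standard `ast` module and never executes it; the second actually runs the code and watches what happens.

```python
import ast

# A small piece of code under test, held as plain text.
SOURCE = '''
def average(values):
    total = 0
    for v in values:
        total += v
    return total / len(values)
'''


def find_divisions(source: str) -> list[int]:
    """Static check: list the line numbers of every division, without running anything."""
    tree = ast.parse(source)
    return [
        node.lineno
        for node in ast.walk(tree)
        if isinstance(node, ast.BinOp) and isinstance(node.op, ast.Div)
    ]


def crashes_on_empty_input(source: str) -> bool:
    """Dynamic check: execute the code and observe its behavior on an empty list."""
    namespace = {}
    exec(source, namespace)  # the code really runs here
    try:
        namespace["average"]([])
    except ZeroDivisionError:
        return True
    return False


if __name__ == "__main__":
    print("Divisions found on lines:", find_divisions(SOURCE))
    print("Crashes on empty input:", crashes_on_empty_input(SOURCE))
```

The static check can only say "there is a division here, someone should look at it." The dynamic check confirms that the empty-list case really does blow up. Each one tells you something the other cannot.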

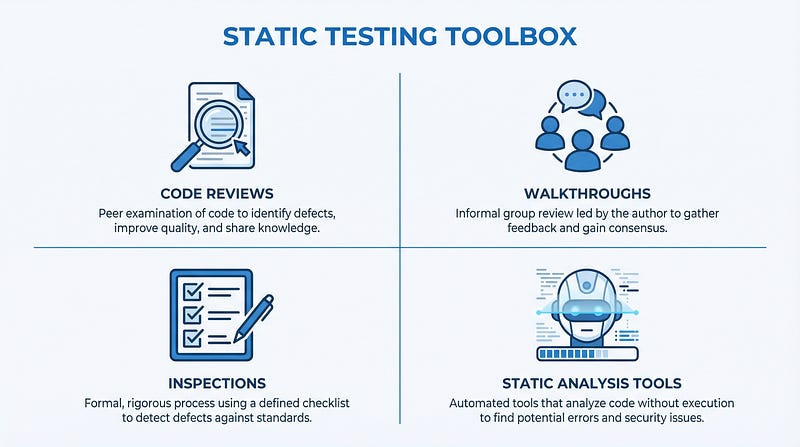

What Static Testing Catches

Static testing shines at finding issues that exist in the code’s structure and logic — problems that are there whether or not the code runs:

Syntax errors and typos — The obvious stuff that would crash your program

Code standard violations — Deviations from agreed-upon coding practices

Security vulnerabilities — Buffer overflows, SQL injection risks, hardcoded credentials

Logic errors — Algorithms that won’t produce correct results

Design flaws — Architectural problems that will cause issues at scale

Requirements gaps — Missing functionality or contradictory specifications
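
As one illustration of the list above, here is a toy static check for hardcoded credentials, written with Python's standard `ast` module. The name patterns and the sample snippet are assumptions chosen for the example; real static analyzers use far richer rules, so treat this as a sketch of the idea rather than a usable scanner.

```python
import ast

# Assumed naming patterns that hint at a secret; purely illustrative.
SUSPICIOUS_NAMES = ("password", "secret", "api_key", "token")


def find_hardcoded_credentials(source: str) -> list[tuple[int, str]]:
    """Return (line, variable) pairs where a suspicious name is assigned a string literal."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Assign):
            continue
        value = node.value
        # Only flag literal strings; values read from the environment are fine.
        if not (isinstance(value, ast.Constant) and isinstance(value.value, str)):
            continue
        for target in node.targets:
            if isinstance(target, ast.Name) and any(
                pattern in target.id.lower() for pattern in SUSPICIOUS_NAMES
            ):
                findings.append((node.lineno, target.id))
    return findings


# Hypothetical code being reviewed. It is parsed, never executed.
SAMPLE = '''
import os
DB_PASSWORD = "hunter2"
api_key = os.environ.get("API_KEY")
timeout = 30
'''

if __name__ == "__main__":
    for line, name in find_hardcoded_credentials(SAMPLE):
        print(f"line {line}: possible hardcoded credential in {name}")
```

Only `DB_PASSWORD` is flagged: `api_key` is read from the environment, and `timeout` is neither suspicious nor a string.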

What Dynamic Testing Catches

Dynamic testing reveals issues that only manifest when code executes — problems that emerge from the interaction between components, systems, and real-world conditions:

Functional defects — Features that don’t work as expected

Integration problems — Components that fail when connected

Performance issues — Slowness, memory leaks, resource exhaustion

Race conditions — Timing-dependent bugs that only appear under specific circumstances

Environment-specific bugs — Issues that only occur in production-like settings

User experience problems — Confusing interfaces, poor workflows
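
Integration problems are a good example of defects you often only see by running the code. In the hypothetical sketch below, each component looks reasonable on its own, and the types line up, yet the combination is wrong because one side speaks Celsius and the other expects Fahrenheit. All function names and thresholds are invented for the illustration.

```python
def read_temperature_celsius(raw: int) -> float:
    """Component A: converts a raw sensor reading (tenths of a degree) to Celsius."""
    return raw / 10.0


def should_trigger_alarm(temp_fahrenheit: float) -> bool:
    """Component B: expects Fahrenheit and fires at the boiling point of water."""
    return temp_fahrenheit >= 212.0


def monitor(raw: int) -> bool:
    """Buggy wiring: hands a Celsius value to a function that expects Fahrenheit."""
    return should_trigger_alarm(read_temperature_celsius(raw))


def monitor_fixed(raw: int) -> bool:
    """Corrected wiring: converts Celsius to Fahrenheit before the check."""
    celsius = read_temperature_celsius(raw)
    return should_trigger_alarm(celsius * 9 / 5 + 32)


if __name__ == "__main__":
    # A raw reading of 1000 means 100.0 C, which is boiling, so the alarm should fire.
    print("buggy wiring fires alarm:", monitor(1000))
    print("fixed wiring fires alarm:", monitor_fixed(1000))
```

Both values are plain floats, so a type checker has nothing to complain about. Running the two components together at a known boiling-point reading is what exposes the silent alarm.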

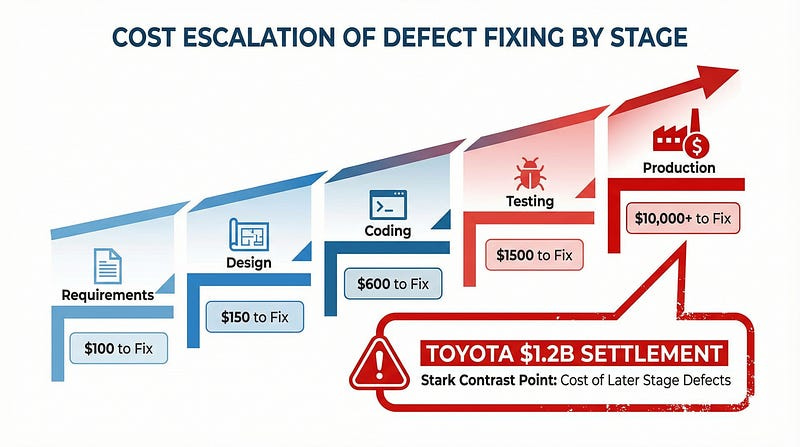

When Static Testing Could Have Saved Lives: The Toyota Case

Between 2009 and 2011, Toyota faced an unintended-acceleration crisis that would cost them over $1.2 billion in settlements and trigger recalls affecting more than 10 million vehicles. Under the microscope? Their Electronic Throttle Control System software.

When NASA engineers investigated the code, what they found was staggering: over 7,000 violations of MISRA-C coding standards when checking just 35 rules. An independent expert witness found over 81,000 violations when checking against the full MISRA 2004 standard.

These weren’t obscure, theoretical issues. MISRA-C guidelines exist to keep error-prone C constructs out of safety-critical embedded software, precisely the kind of constructs that can contribute to system lockups and safety failures. Static analysis tools could have flagged the vast majority of these violations before any vehicle ever left the factory.

NASA also found that Toyota had never performed worst-case execution timing (WCET) analysis — a static technique that verifies software will always complete its tasks within required time limits. In safety-critical systems like vehicle controls, this oversight is nearly unforgivable.

When Dynamic Testing Saves the Day: The Knight Capital Catastrophe

On August 1, 2012, Knight Capital deployed a trading algorithm that would lose the company $440 million in just 45 minutes — effectively destroying the firm.

The root cause? The new code was rolled out to seven of the firm’s eight production servers, but the eighth was missed. That eighth server still contained old, dormant code that was accidentally reactivated when the new release went live. Without proper dynamic testing of the complete production environment, this time bomb went undetected.

Static analysis wouldn’t have caught this — the old code was syntactically correct and had worked fine years earlier. What was needed was integration testing and failure mode testing across the complete system. Dynamic testing in a production-mirror environment would have revealed the dormant code’s reactivation before it could wreak havoc.
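
One simple dynamic safeguard in this spirit is a post-deployment check that every server in the fleet actually reports the release you think you shipped. The sketch below is hypothetical: the server names and version strings are invented, and in a real setup the reported versions would come from querying each running server rather than from a hardcoded dictionary.

```python
def find_stale_servers(reported_versions: dict[str, str], expected: str) -> list[str]:
    """Return the servers whose running version differs from the expected release."""
    return sorted(
        name for name, version in reported_versions.items() if version != expected
    )


# Hypothetical fleet of eight servers: seven received release "2.0", one was missed.
FLEET = {f"server-{i}": "2.0" for i in range(1, 8)}
FLEET["server-8"] = "1.0"

if __name__ == "__main__":
    stale = find_stale_servers(FLEET, expected="2.0")
    if stale:
        print("Deployment incomplete, stale servers:", stale)
    else:
        print("All servers are running the expected release.")
```

A check like this only works because it asks the live systems what they are running. No amount of reading the new code would reveal that one machine never received it.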

The Numbers Don’t Lie: Efficiency Comparison

Research consistently shows that static and dynamic testing have different strengths:

Formal code inspections (a static technique) detect approximately 60% of defects and find about 5 defects per hour of review time.

Dynamic testing catches different types of defects — particularly integration and runtime issues — but typically finds fewer than 3 defects per hour.

But here’s the critical insight: they find different bugs. Static testing excels at finding structural issues early, while dynamic testing catches behavioral problems that only emerge during execution. Using both approaches provides significantly better coverage than either alone.

Practical Application: Building Your Testing Strategy

Here’s how to leverage both approaches effectively:

During Development (Static Focus):

• Integrate static analysis tools into your IDE — catch issues as you type

• Conduct code reviews before merging — fresh eyes catch what tools miss

• Use pre-commit hooks to enforce coding standards automatically

• Review requirements and design documents for gaps and contradictions
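
To give a feel for the pre-commit idea, here is a minimal sketch of the core of such a hook in Python. It relies on the built-in `compile()` function, which parses and compiles source text without executing it, so syntax errors are caught before the code ever runs. The function names are invented for this example, and a real project would more likely lean on an established linter than a hand-rolled script.

```python
from pathlib import Path


def check_syntax(paths: list[str]) -> list[str]:
    """Statically check each file for syntax errors; return one message per failure."""
    errors = []
    for path in paths:
        source = Path(path).read_text(encoding="utf-8")
        try:
            # compile() builds a code object but does not run it.
            compile(source, path, "exec")
        except SyntaxError as exc:
            errors.append(f"{path}:{exc.lineno}: {exc.msg}")
    return errors


def main(paths: list[str]) -> int:
    """Print any problems and return an exit status (0 = clean, 1 = blocked)."""
    problems = check_syntax(paths)
    for problem in problems:
        print(problem)
    return 1 if problems else 0
```

Wired into a hook, a script like this would receive the staged file names as arguments and exit with the value returned by `main`, so a non-zero status blocks the commit.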

During Testing (Dynamic Focus):

• Run unit tests with every build — catch regressions immediately

• Perform integration testing when components are combined

• Execute system testing in production-mirror environments

• Include performance and security testing in your test suite

The AI Angle: Trust But Verify

AI tools are increasingly capable of assisting with both static and dynamic testing. AI-powered static analyzers can find complex patterns that rule-based tools miss. AI can generate test cases and even predict where bugs are likely to hide.

But remember our core principle: trust but verify. AI suggestions for code improvements need human review. AI-generated test cases need validation. AI predictions about bug locations need confirmation through actual testing.

The best approach combines AI efficiency with human judgment — using AI to accelerate both static and dynamic testing while maintaining the critical thinking that catches what algorithms miss.

Key Takeaways

1. Static testing examines code without running it — catching structural issues early and cheaply.

2. Dynamic testing executes code to observe behavior — revealing runtime and integration issues.

3. Neither approach alone is sufficient — they find fundamentally different types of defects.

4. Earlier detection means cheaper fixes — static testing’s biggest advantage is timing.

5. Real-world disasters prove both approaches are essential — Toyota needed better static testing; Knight Capital needed better dynamic testing.

What’s Next?

Understanding static versus dynamic testing is foundational, but knowing which tests to run is equally important. In our next article, we’ll explore “What Makes a Good Tester?” — the essential skills and mindset traits that separate great testers from the rest.

Until then, look at your current testing approach: Are you balancing static and dynamic techniques effectively? Or is one side of the quality coin getting neglected?

Keep questioning, keep testing, and always remember — quality is everyone’s responsibility.

Excellent framing with the Toyota and Knight Capital examples. The coin metaphor works perfectly because it captures how these approaches cover different failure modes rather than just being redundant. I've seen teams skip static analysis thinking their test coverage is good enough, but they end up chasing bugs that could've been caught before a single line executed.